Potential Impact of iOS 18 and Apple Intelligence Features on Data Privacy

Apple's iOS 18 introduces "Apple Intelligence," a set of AI-driven capabilities integrated directly into core apps like Mail and Messages. These features are designed to enhance user productivity, offering smarter email summarization, advanced text predictions, and context-aware suggestions.

However, these improvements raise concerns about potential data privacy risks, especially when sensitive user data is sent off-device for AI processing.

For developers in highly regulated industries such as financial technology and healthcare, these new AI features could expose sensitive data to unintended risks. This is particularly relevant for those handling financial or personal information, where strict compliance and security are paramount.

Apple Intelligence and Data Privacy Risks

One of the standout features of iOS 18 is Apple Intelligence’s ability to read and summarize emails within the Mail app. While this is convenient for users, it may involve the analysis of sensitive communications, including:

- Bank statements

- Investment account details

- Loan applications

- Corporate contracts

- Medical records

Apple Intelligence may process this data off-device to provide features such as highlighting key points or generating smart replies. Even though Apple has built a strong reputation for privacy, transferring such sensitive information for AI processing could expose users to unintended privacy risks, particularly if their data is processed on Apple’s servers, even temporarily.

Implications for Developers in Finance and Healthcare

For developers in highly regulated industries, where data security is a critical concern, these AI features could lead to compliance challenges. Below are some key areas of impact:

1. Regulatory Compliance and Data Privacy Laws

Financial technology and healthcare applications must comply with regulations such as GDPR, HIPAA, CCPA, and PCI DSS, which dictate how sensitive data is handled. The possibility that Apple Intelligence processes user data off-device could conflict with compliance mandates requiring secure handling and explicit user consent.

For example, under GDPR, processing personal data requires a lawful basis, such as the user's clear consent. Developers of regulated apps need to evaluate how iOS 18 features interact with their apps and determine whether this automatic processing could lead to violations, particularly in relation to the transfer or storage of financial data on third-party servers.

2. Risk of Data Leakage through Apple’s AI

While Apple’s privacy infrastructure is generally strong, transferring sensitive user data off-device increases the risk of interception or unauthorized access. This is especially concerning for regulated app developers handling confidential user information, such as account balances, transaction details, personal medical communication or payment histories.

Developers should closely monitor how Apple Intelligence interacts with emails, texts, or communications containing potentially sensitive data. Ensuring that sensitive information is not unintentionally processed by Apple’s AI is crucial to maintaining the security and privacy standards required by the industry.

3. User Transparency and Control

Trust is critical for users of financial technology and healthcare applications. However, users may not always be fully aware of how their sensitive information is processed by AI features integrated into the apps they use, creating a potential trust gap.

Developers should focus on offering users transparency about how Apple Intelligence interacts with their data and should provide easily accessible privacy settings. For example, users should have the option to opt out of Apple Intelligence features that process financial or sensitive communications to ensure data protection.

4. Restricting Apple Intelligence in Financial and Healthcare Apps

Given the risks, developers may want to disable or limit Apple Intelligence features within their apps, especially when dealing with sensitive financial information. For example, if your app allows users to view bank statements, manage investments, or review medical reports, limiting Apple Intelligence's interaction with these communications may prevent data from being analyzed externally.

Developers should also consider designing enterprise-level features that disable or restrict Apple Intelligence access to critical data within apps, ensuring sensitive data remains within a secure environment.
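One way to limit what any downstream AI feature can see is to mask sensitive values before the text reaches a surface where summarization or suggestions might run. The following is a minimal sketch of that idea, not an Apple API: the `ACCOUNT_LIKE` pattern and the `redact_account_numbers` helper are hypothetical names introduced for illustration, and the pattern is deliberately broad.

```python
import re

# Hypothetical pattern: 8 to 19 digits, optionally separated by single
# spaces or hyphens. Broad on purpose, so it can also match dates or IDs.
ACCOUNT_LIKE = re.compile(r"\b\d(?:[ -]?\d){7,18}\b")


def redact_account_numbers(text: str, keep_last: int = 4) -> str:
    """Mask account-like digit runs, keeping only the last few digits."""

    def _mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group(0))
        keep = max(0, min(keep_last, len(digits)))
        visible = digits[len(digits) - keep:]
        return "*" * (len(digits) - keep) + visible

    return ACCOUNT_LIKE.sub(_mask, text)


if __name__ == "__main__":
    print(redact_account_numbers("Acct 1234 5678 9012 3456 balance due"))
```

Because the pattern errs toward over-redaction, a real app would likely tune it per field type rather than apply it to free text wholesale.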

Guidance for Mobile App Penetration Testers: Assessing the Integration of Apple Intelligence in Your Apps

With iOS 18’s Apple Intelligence features offering developers powerful AI capabilities, mobile app penetration testers must adjust their security testing approaches to account for these new integrations. Testing should focus on how Apple Intelligence features are incorporated into the apps under assessment, particularly when sensitive financial or medical data or compliance requirements are involved.

Here’s a guide for penetration testers assessing apps that integrate Apple Intelligence capabilities:

1. Evaluate How Apple Intelligence Interacts with Sensitive Data

For apps that leverage Apple Intelligence, a key task for penetration testers is to evaluate how the AI interacts with sensitive user data. This could involve AI-driven features like summarizing content, generating personalized suggestions, or analyzing user behavior within the app.

Testers should assess:

- Whether sensitive data (financial information, personal identification details, etc.) is exposed during AI processing.

- Whether data is processed on-device or sent to external servers for processing.

- Whether mechanisms are in place to limit or prevent AI from accessing sensitive data, particularly for regulated apps in sectors like financial technology.

2. Test Data Flow for Potential Off-Device Processing

Many AI features in iOS 18 require significant computational power, which may lead to sensitive data being sent off-device for cloud-based processing. Penetration testers should focus on:

- Tracking the data flow within the app to identify if any sensitive information (such as financial transactions or user credentials) is sent off-device for AI processing.

- Verifying that any off-device data transmissions are secured with proper encryption during transit.

- Assessing whether the app’s AI integrations comply with relevant data privacy regulations, such as GDPR, HIPAA or PCI DSS.

For apps in finance and healthcare, this is particularly critical, as the offloading of sensitive data for processing could violate industry regulations if not handled properly.
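The data-flow checks above can be partly automated once traffic has been captured. The sketch below assumes a hypothetical export format, a list of dictionaries with `url` and `body` keys produced by whatever intercepting proxy the tester uses, and flags requests that are not sent over HTTPS, that go to hosts outside an allowlist, or whose body contains a Luhn-valid card number. The function names and the allowlist are illustrative assumptions.

```python
import re
from urllib.parse import urlsplit

# Candidate card numbers: 13 to 19 digits with optional space/hyphen separators.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    if len(digits) < 13:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def audit_requests(requests, allowed_hosts):
    """Return (url, reason) findings for captured outbound requests."""
    findings = []
    for req in requests:
        parts = urlsplit(req["url"])
        if parts.scheme != "https":
            findings.append((req["url"], "not sent over HTTPS"))
        if (parts.hostname or "") not in allowed_hosts:
            findings.append((req["url"], "host not on allowlist"))
        body = req.get("body", "")
        if any(luhn_valid(m.group(0)) for m in CARD_CANDIDATE.finditer(body)):
            findings.append((req["url"], "body contains a Luhn-valid card number"))
    return findings
```

An HTTPS scheme only indicates that TLS was requested. It says nothing about certificate validation or pinning, which still need to be tested separately.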

3. Assess the Security of AI-Generated Features

If the app uses Apple Intelligence to generate user-facing features, such as summaries of financial reports, medical records, investment portfolios, or other sensitive data, testers should evaluate:

- The security of the AI-generated output and whether it is vulnerable to tampering or unauthorized access.

- Whether the app properly restricts which data Apple Intelligence can process, ensuring that financial data is handled with extra care and not inadvertently shared with external systems.

- Any potential mobile vulnerabilities that may arise from AI suggestions or predictions, such as unauthorized access to confidential information through AI-driven features like predictive typing or document summaries.
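For the tampering check, one thing a tester can look for is whether stored AI-generated output carries any integrity protection. The sketch below assumes, hypothetically, that an app caches generated summaries alongside an HMAC-SHA256 tag. `sign_summary` and `summary_is_intact` are illustrative names, and the key handling is out of scope here.

```python
import hashlib
import hmac


def sign_summary(summary: str, key: bytes) -> str:
    """Compute a hex HMAC-SHA256 tag over the summary text."""
    return hmac.new(key, summary.encode("utf-8"), hashlib.sha256).hexdigest()


def summary_is_intact(summary: str, tag: str, key: bytes) -> bool:
    """Check a stored tag against the summary using a constant-time comparison."""
    expected = sign_summary(summary, key)
    return hmac.compare_digest(expected.encode("utf-8"), tag.encode("utf-8"))


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"
    tag = sign_summary("Balance: 100.00 USD", key)
    print(summary_is_intact("Balance: 100.00 USD", tag, key))
    print(summary_is_intact("Balance: 900.00 USD", tag, key))
```

If a cached summary can be edited on a test device without any such check failing, that is worth reporting as a finding.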

4. Simulate User Privacy Controls and Customizations

One of the key aspects of AI integration is how well users can control their privacy. As penetration testers, it’s essential to test:

- Whether users are provided with clear and customizable privacy settings to control how Apple Intelligence interacts with their data within the app.

- Whether the app allows users to disable specific AI features (like document summarization or message predictions) when handling sensitive or financial data.

- Whether these privacy controls are enforced effectively and whether disabling AI features properly prevents any further AI-driven data processing.
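Enforcement of an opt-out can be checked against an event timeline gathered during testing. The sketch below assumes a hypothetical log of `(timestamp, kind, feature)` tuples, where `kind` is `opt_out`, `opt_in`, or `ai_processing`. It reports any AI-processing event that occurred while its feature was disabled. The event names are assumptions, not an iOS logging format.

```python
def find_opt_out_violations(events):
    """Return (timestamp, feature) for AI processing seen while the feature was disabled."""
    disabled = set()
    violations = []
    # Sort by timestamp only; ties keep their original log order.
    for timestamp, kind, feature in sorted(events, key=lambda e: e[0]):
        if kind == "opt_out":
            disabled.add(feature)
        elif kind == "opt_in":
            disabled.discard(feature)
        elif kind == "ai_processing" and feature in disabled:
            violations.append((timestamp, feature))
    return violations


if __name__ == "__main__":
    log = [
        (1, "ai_processing", "summarize"),
        (2, "opt_out", "summarize"),
        (3, "ai_processing", "summarize"),
    ]
    print(find_opt_out_violations(log))
```

An empty result only shows that no processing was observed in the captured window, so the capture should cover app restarts and background activity as well.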

5. Ensure Compliance with Data Privacy and Industry Standards

For apps developed in regulated industries, such as financial technology or healthcare, penetration testers must verify that Apple Intelligence features comply with industry regulations. Testers should:

- Conduct thorough compliance checks to ensure that AI-driven features comply with PCI DSS, HIPAA, GDPR, or CCPA, especially regarding sensitive data handling.

- Test whether financial data (such as user account details or transaction histories) and medical data are processed per regulatory requirements, ensuring that the AI systems do not introduce compliance risks.

6. Explore Edge Cases and Unintended AI Data Access

Apple Intelligence capabilities can be powerful, but there’s always the risk that AI systems might inadvertently process data not intended for analysis. Penetration testers should:

- Check whether the AI system can access and process unexpected data formats or embedded information (such as medical data inside image files or PDFs), depending on how the app handles such scenarios.

- Test whether AI features like summarization or smart suggestions trigger any unintended access to confidential or sensitive data.

- Simulate edge cases with corrupted or non-standard data inputs to see how AI-driven processes react, identifying any potential vulnerabilities or unintended behaviors.
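A small, deterministic set of malformed inputs is often enough to start probing the edge cases listed above. The sketch below derives a few variants from one valid sample document. The variant names and the choice of mutations are illustrative assumptions, and a real assessment would add format-specific cases for PDFs and images.

```python
def edge_case_inputs(sample: bytes) -> dict:
    """Build deterministic malformed variants of a valid sample document."""
    return {
        "empty": b"",
        "truncated": sample[: len(sample) // 2],
        # 0xFF can never appear in well-formed UTF-8.
        "invalid_utf8": sample + b"\xff\xfe",
        "embedded_nul": sample[:1] + b"\x00" + sample[1:],
        # Zero-width space and zero-width joiner, invisible when rendered.
        "zero_width": sample + "\u200b\u200d".encode("utf-8"),
        "oversized": sample * 1000,
    }


if __name__ == "__main__":
    for name, payload in edge_case_inputs(b"Patient: Jane").items():
        print(name, len(payload))
```

Each variant can then be fed through the app's import or share paths while watching for crashes, unexpected summaries, or processing of content the user never saw.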

Apple Intelligence Review: Balancing AI Capabilities with Data Privacy Concerns

Apple’s iOS 18 Apple Intelligence features bring powerful AI capabilities to users through apps like Mail and Messages, making daily tasks more efficient and personalized.

However, the automatic processing of sensitive information, such as financial or medical data, raises important privacy concerns. Developers and mobile app penetration testers in highly regulated industries like financial technology and healthcare must be vigilant about how these features interact with their applications.

By understanding the data flow, limiting AI access to sensitive data, offering user control and transparency, and conducting thorough security assessments, developers can ensure that iOS 18’s AI advancements enhance the user experience without compromising security or privacy. For penetration testers, exploring these potential privacy vulnerabilities is critical to securing applications in the face of evolving AI-driven technology.

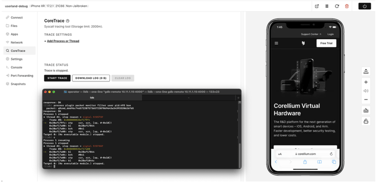

Start testing iOS 18 with Corellium today—because the future waits for no one! To learn more about Corellium, set up a meeting with our team today.
